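Extensions

# General info

Extensions are a more convenient form of user scripts.

Each extension lives in its own subdirectory inside the extensions directory. You can install an extension with git like this: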

git clone https://github.com/AUTOMATIC1111/stable-diffusion-webui-aesthetic-gradients extensions/aesthetic-gradients

This installs the extension from https://github.com/AUTOMATIC1111/stable-diffusion-webui-aesthetic-gradients into the extensions/aesthetic-gradients directory.

Alternatively, you can copy-paste a directory into "extensions".
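Because every extension occupies its own subdirectory, the set of installed extensions can be read straight from the file system. The following is a minimal sketch under that assumption; `list_installed_extensions` is a hypothetical helper, not part of the web UI, and the demo runs against a throwaway directory tree instead of a real install.

```python
import tempfile
from pathlib import Path


def list_installed_extensions(webui_root: Path) -> list[str]:
    """Return the names of the subdirectories found in <webui_root>/extensions."""
    extensions_dir = webui_root / "extensions"
    if not extensions_dir.is_dir():
        return []
    # Only directories count as extensions; stray files are ignored.
    return sorted(p.name for p in extensions_dir.iterdir() if p.is_dir())


# Demo against a temporary directory tree (hypothetical layout).
with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    (root / "extensions" / "aesthetic-gradients").mkdir(parents=True)
    (root / "extensions" / "wildcards").mkdir()
    (root / "extensions" / "notes.txt").write_text("not an extension")
    installed = list_installed_extensions(root)

print(installed)
```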

For developing extensions, see Developing extensions.

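# Security

Because extensions let the user install and run arbitrary code, they can be used maliciously. Installing extensions is therefore disabled by default when the web UI runs with options that allow remote users to connect to the server (--share or --listen). You will still have the UI, but trying to install anything will result in an error. If you want to use those options and still be able to install extensions, use the --enable-insecure-extension-access command line flag.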
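The rule above can be written as a small predicate. This is only an illustrative sketch: the three flag names come from the text, while `build_parser` and `extension_install_allowed` are hypothetical names and do not reproduce the web UI's actual implementation.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    """Parser exposing only the three flags mentioned in the text."""
    parser = argparse.ArgumentParser()
    parser.add_argument("--share", action="store_true")
    parser.add_argument("--listen", action="store_true")
    parser.add_argument("--enable-insecure-extension-access", action="store_true")
    return parser


def extension_install_allowed(args: argparse.Namespace) -> bool:
    """Allow installs locally, or remotely only with the explicit override flag."""
    remotely_reachable = args.share or args.listen
    return (not remotely_reachable) or args.enable_insecure_extension_access


parser = build_parser()
local = extension_install_allowed(parser.parse_args([]))
shared = extension_install_allowed(parser.parse_args(["--share"]))
overridden = extension_install_allowed(
    parser.parse_args(["--listen", "--enable-insecure-extension-access"])
)
print(local, shared, overridden)
```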

# Extensions

Aesthetic Gradients

https://github.com/AUTOMATIC1111/stable-diffusion-webui-aesthetic-gradients

Creates an embedding from one or a few pictures and uses it to apply their style to generated images.

Wildcards

https://github.com/AUTOMATIC1111/stable-diffusion-webui-wildcards

Allows you to use `__name__` syntax in your prompt to get a random line from a file named name.txt in the wildcards directory.
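A rough sketch of how this kind of substitution could work is shown below. It is not the extension's code: `expand_wildcards`, the `color.txt` file, and the choice to leave unknown wildcards untouched are all assumptions made for illustration.

```python
import random
import re
import tempfile
from pathlib import Path

# Matches __name__ and captures "name".
WILDCARD = re.compile(r"__(\w+?)__")


def expand_wildcards(prompt: str, wildcard_dir: Path, rng: random.Random) -> str:
    """Replace each __name__ with a random non-empty line from <wildcard_dir>/name.txt."""

    def pick(match: re.Match) -> str:
        path = wildcard_dir / f"{match.group(1)}.txt"
        if not path.is_file():
            return match.group(0)  # assumption: unknown wildcards are left as-is
        lines = [ln.strip() for ln in path.read_text(encoding="utf-8").splitlines() if ln.strip()]
        return rng.choice(lines) if lines else match.group(0)

    # The callback runs once per occurrence, so repeated wildcards are drawn independently.
    return WILDCARD.sub(pick, prompt)


COLORS = ["red", "green", "blue"]
with tempfile.TemporaryDirectory() as tmp:
    wildcard_dir = Path(tmp)
    (wildcard_dir / "color.txt").write_text("\n".join(COLORS), encoding="utf-8")
    expanded = expand_wildcards("a __color__ car", wildcard_dir, random.Random())
    untouched = expand_wildcards("a __missing__ car", wildcard_dir, random.Random())

print(expanded)
```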

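Dynamic Prompts

https://github.com/adieyal/sd-dynamic-prompts

A custom extension for AUTOMATIC1111/stable-diffusion-webui that implements an expressive template language for random or combinatorial prompt generation, along with features to support deep wildcard directory structures.

More features and additions are shown in the readme.

Using this extension, the prompt:

`A {house|apartment|lodge|cottage} in {summer|winter|autumn|spring} by {2$$artist1|artist2|artist3}`

will produce any of the following prompts:

- A house in summer by artist1, artist2

- A cottage in autumn by artist3, artist1

- A cottage in winter by artist2, artist3

- …

This is especially useful if you are searching for interesting combinations of artists and styles.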
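To make the template behaviour concrete, here is a simplified sketch of variant expansion. It handles only flat `{a|b|c}` groups and the `{N$$a|b|c}` form that picks N distinct options; nesting and the extension's other features are not covered, and `expand_variants` is a hypothetical name, not the extension's API. Joining multiple picks with ", " is inferred from the example outputs above.

```python
import random
import re

# Matches one flat {...} group without nested braces.
VARIANT = re.compile(r"\{([^{}]+)\}")


def expand_variants(template: str, rng: random.Random) -> str:
    """Replace each {a|b|c} with one option, or {N$$a|b|c} with N distinct options."""

    def pick(match: re.Match) -> str:
        body = match.group(1)
        count = 1
        if "$$" in body:
            count_text, body = body.split("$$", 1)
            count = int(count_text)
        options = [opt.strip() for opt in body.split("|")]
        # sample() draws without replacement, so the picks are distinct.
        chosen = rng.sample(options, k=min(count, len(options)))
        return ", ".join(chosen)

    return VARIANT.sub(pick, template)


template = (
    "A {house|apartment|lodge|cottage} in {summer|winter|autumn|spring} "
    "by {2$$artist1|artist2|artist3}"
)
prompt = expand_variants(template, random.Random())
print(prompt)
```

Each call produces one random combination; generating every combination instead would be a matter of iterating over the option lists rather than sampling from them.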

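You can also pick a random string from a file. Assuming you have the file seasons.txt in WILDCARD_DIR (see below), then:

`__seasons__ is coming`

might generate the following:

- Winter is coming

- Spring is coming

- …

You can also use the same wildcard twice:

`I love __seasons__ better than __seasons__`

- I love winter better than summer

- I love spring better than spring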

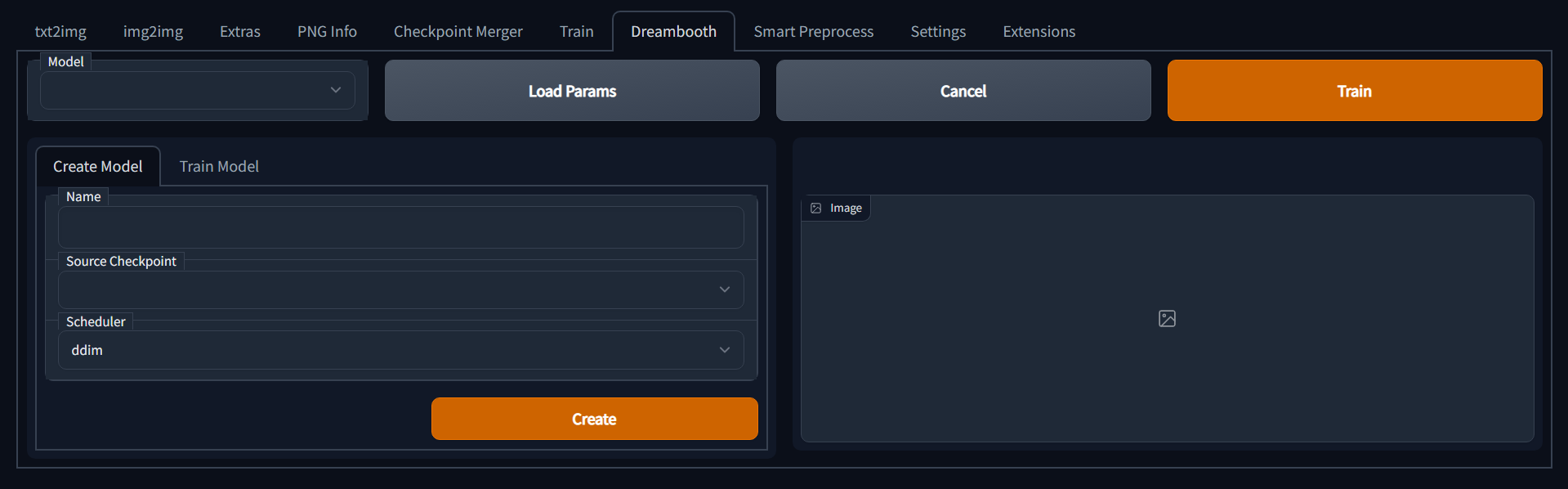

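Dreambooth

https://github.com/d8ahazard/sd_dreambooth_extension

Dreambooth in the UI. Refer to the project readme for tuning and configuration requirements. Includes LoRA (Low Rank Adaptation).

Based on ShivamShiaro's repo.

Smart Process

https://github.com/d8ahazard/sd_smartprocess

Intelligent cropping, captioning, and image enhancement.

Image browser

https://github.com/yfszzx/stable-diffusion-webui-images-browser

Provides an interface to browse created images in the web browser.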

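## Inspiration

https://github.com/yfszzx/stable-diffusion-webui-inspiration

Randomly displays pictures in the typical style of an artist or artistic genre; once you select one, more pictures of that artist or genre are shown. This takes the difficulty out of choosing a suitable art style when you create.

## Deforum

https://github.com/deforum-art/deforum-for-automatic1111-webui

The official port of Deforum, an extensive script for 2D and 3D animations, supporting keyframable sequences, dynamic math parameters (even inside the prompts), dynamic masking, depth estimation and warping.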

Artists To Study

https://github.com/camenduru/stable-diffusion-webui-artists-to-study

https://artiststostudy.pages.dev/ adapted as an extension for [web ui](https://github.com/AUTOMATIC1111/stable-diffusion-webui).

To install it, clone the repo into the extensions directory and restart the web ui:

git clone https://github.com/camenduru/stable-diffusion-webui-artists-to-study

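You can add an artist name to the clipboard by clicking on it. (Thanks for the idea, @gmaciocci.)

Aesthetic Image Scorer

https://github.com/tsngo/stable-diffusion-webui-aesthetic-image-scorer

Extension for https://github.com/AUTOMATIC1111/stable-diffusion-webui

Calculates an aesthetic score for generated images using the CLIP+MLP Aesthetic Score Predictor, based on Chad Scorer (chad_scorer.py).

See Discussions.

Saves the score to Windows tags, with other options planned.

Dataset Tag Editor

https://github.com/toshiaki1729/stable-diffusion-webui-dataset-tag-editor

This is an extension for editing captions in training datasets for Stable Diffusion web UI by AUTOMATIC1111.

It works well with text captions in comma-separated style (such as the tags generated by the DeepBooru interrogator).

Captions stored in image filenames can be loaded, but edited captions can only be saved as text files.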
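As an illustration of the comma-separated caption format, the sketch below parses a caption into tags, edits them, and saves the result as a text file. The helper names are hypothetical, and writing the caption to a .txt file that shares the image's base name is an assumed convention, not something this page confirms about the extension.

```python
import tempfile
from pathlib import Path


def parse_tags(caption: str) -> list[str]:
    """Split a comma-separated caption into clean, non-empty tags."""
    return [tag.strip() for tag in caption.split(",") if tag.strip()]


def format_tags(tags: list[str]) -> str:
    """Join tags back into the comma-separated caption style."""
    return ", ".join(tags)


def save_caption(image_path: Path, tags: list[str]) -> Path:
    """Write the tags to a .txt file next to the image (assumed naming convention)."""
    caption_path = image_path.with_suffix(".txt")
    caption_path.write_text(format_tags(tags), encoding="utf-8")
    return caption_path


# Example edit: drop one tag and rename another.
tags = parse_tags("1girl, solo,  long hair , smile,")
tags = [t for t in tags if t != "solo"]
tags = ["long_hair" if t == "long hair" else t for t in tags]

with tempfile.TemporaryDirectory() as tmp:
    saved = save_caption(Path(tmp) / "0001.png", tags)
    saved_name = saved.name
    saved_text = saved.read_text(encoding="utf-8")

print(saved_name, "->", saved_text)
```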

auto-sd-paint-ext

https://github.com/Interpause/auto-sd-paint-ext

Formerly known as "auto-sd-krita".

Extension for AUTOMATIC1111's webUI with a Krita plugin (other drawing studios soon?)

[Images: outdated demo vs. new UI (TODO: demo image)]

Differences

- UI no longer freezes during image update

- Inpainting layer no longer has to be manually hidden, nor use white specifically

- UI has been improved & squeezed further

- Scripts API is now possible

training-picker

https://github.com/Maurdekye/training-picker

Adds a tab to the webui that allows the user to automatically extract keyframes from video, and manually extract 512x512 crops of those frames for use in model training.

Installation

- Install AUTOMATIC1111’s Stable Diffusion Webui

- Install ffmpeg for your operating system

- Clone this repository into the extensions folder inside the webui

- Drop videos you want to extract cropped frames from into the training-picker/videos folder

Unprompted

https://github.com/ThereforeGames/unprompted

Supercharge your prompt workflow with this powerful scripting language!

Unprompted is a highly modular extension for AUTOMATIC1111’s Stable Diffusion Web UI that allows you to include various shortcodes in your prompts. You can pull text from files, set up your own variables, process text through conditional functions, and so much more - it’s like wildcards on steroids.

While the intended use case is Stable Diffusion, this engine is also flexible enough to serve as an all-purpose text generator.

Booru tag autocompletion

https://github.com/DominikDoom/a1111-sd-webui-tagcomplete

Displays autocompletion hints for tags from “image booru” boards such as Danbooru. Uses local tag CSV files and includes a config for customization.

novelai-2-local-prompt

https://github.com/animerl/novelai-2-local-prompt

Add a button to convert the prompts used in NovelAI for use in the WebUI. In addition, add a button that allows you to recall a previously used prompt.

Tokenizer

https://github.com/AUTOMATIC1111/stable-diffusion-webui-tokenizer

Adds a tab that lets you preview how the CLIP model would tokenize your text.

Push to 🤗 Hugging Face

https://github.com/camenduru/stable-diffusion-webui-huggingface

To install it, clone the repo into the extensions directory and restart the web ui:

git clone https://github.com/camenduru/stable-diffusion-webui-huggingface

pip install huggingface-hub

StylePile

https://github.com/some9000/StylePile

An easy way to mix and match elements to prompts that affect the style of the result.

Latent Mirroring

https://github.com/dfaker/SD-latent-mirroring

Applies mirroring and flips to the latent images to produce anything from subtle balanced compositions to perfect reflections.

Embeddings editor

https://github.com/CodeExplode/stable-diffusion-webui-embedding-editor

Allows you to manually edit textual inversion embeddings using sliders.

seed travel

https://github.com/yownas/seed_travel

Small script for AUTOMATIC1111/stable-diffusion-webui to create images that exist between seeds.

shift-attention

https://github.com/yownas/shift-attention

Generate a sequence of images shifting attention in the prompt. This script enables you to give a range to the weight of tokens in a prompt and then generate a sequence of images stepping from the first one to the second.

https://user-images.githubusercontent.com/13150150/193368939-c0a57440-1955-417c-898a-ccd102e207a5.mp4
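The idea of stepping a token's weight across a range can be sketched as follows. This is not the script's own range syntax or code: `weight_steps` and `prompts_for_range` are hypothetical helpers that interpolate linearly and emit one prompt per step using the web UI's `(token:weight)` attention syntax.

```python
def weight_steps(start: float, end: float, steps: int) -> list[float]:
    """Return `steps` evenly spaced weights from start to end, inclusive."""
    if steps < 2:
        raise ValueError("steps must be at least 2")
    return [start + (end - start) * i / (steps - 1) for i in range(steps)]


def prompts_for_range(template: str, token: str, start: float, end: float, steps: int) -> list[str]:
    """Fill {token} in the template with (token:weight) for each interpolated weight."""
    return [
        template.format(token=f"({token}:{weight:.2f})")
        for weight in weight_steps(start, end, steps)
    ]


prompts = prompts_for_range("a photo of a {token} in a forest", "cat", 0.5, 1.5, 5)
for p in prompts:
    print(p)
```

Rendering each prompt in order with a fixed seed would then give a sequence in which the emphasis on the token changes gradually.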

prompt travel

https://github.com/Kahsolt/stable-diffusion-webui-prompt-travel

Extension script for AUTOMATIC1111/stable-diffusion-webui to travel between prompts in latent space.

Sonar

https://github.com/Kahsolt/stable-diffusion-webui-sonar

Improves generated image quality by searching for similar (yet even better!) images in the neighborhood of a known image. It focuses on single-prompt optimization rather than traveling between multiple prompts.

Detection Detailer

https://github.com/dustysys/ddetailer

An object detection and auto-mask extension for Stable Diffusion web UI.

conditioning-highres-fix

https://github.com/klimaleksus/stable-diffusion-webui-conditioning-highres-fix

This extension rewrites the Inpainting conditioning mask strength value relative to Denoising strength at runtime. This is useful for inpainting models such as sd-v1-5-inpainting.ckpt.

Randomize

https://github.com/stysmmaker/stable-diffusion-webui-randomize

Allows for random parameters during txt2img generation. This script is processed for all generations, regardless of the script selected, meaning this script will function with others as well, such as AUTOMATIC1111/stable-diffusion-webui-wildcards.

Auto TLS-HTTPS

https://github.com/papuSpartan/stable-diffusion-webui-auto-tls-https

Allows you to easily, or even completely automatically, start using HTTPS.

DreamArtist

https://github.com/7eu7d7/DreamArtist-sd-webui-extension

Towards Controllable One-Shot Text-to-Image Generation via Contrastive Prompt-Tuning.

WD 1.4 Tagger

https://github.com/toriato/stable-diffusion-webui-wd14-tagger

Uses a trained model file, produces WD 1.4 Tags. Model link - https://mega.nz/file/ptA2jSSB#G4INKHQG2x2pGAVQBn-yd_U5dMgevGF8YYM9CR_R1SY

booru2prompt

https://github.com/Malisius/booru2prompt

This SD extension allows you to turn posts from various image boorus into stable diffusion prompts. It does so by pulling a list of tags down from their API. You can copy-paste in a link to the post you want yourself, or use the built-in search feature to do it all without leaving SD.

also see:

https://github.com/stysmmaker/stable-diffusion-webui-booru-prompt

gelbooru-prompt

https://github.com/antis0007/sd-webui-gelbooru-prompt

Fetch tags with image hash. Updates planned.

Merge Board

https://github.com/bbc-mc/sdweb-merge-board

Multiple lane merge support (up to 10). Save and load your merging combinations as Recipes, which are simple text.

also see:

https://github.com/Maurdekye/model-kitchen

Depth Maps

https://github.com/thygate/stable-diffusion-webui-depthmap-script

Creates depth maps from the generated images. The result can be viewed on 3D or holographic devices like VR headsets or a Looking Glass display, used in render or game engines on a plane with a displacement modifier, and maybe even 3D printed.

multi-subject-render

https://github.com/Extraltodeus/multi-subject-render

It is a depth-aware extension that can help to create multiple complex subjects in a single image. It generates a background, then multiple foreground subjects, cuts out their backgrounds after a depth analysis, pastes them onto the background, and finally does an img2img pass for a clean finish.

depthmap2mask

https://github.com/Extraltodeus/depthmap2mask

Create masks for img2img based on a depth estimation made by MiDaS.

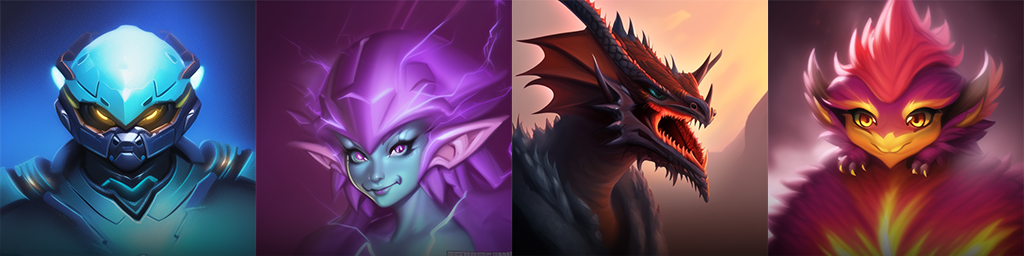
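One simple way to turn a depth estimate into a mask is to normalize it and apply a threshold. The sketch below assumes that larger depth values mean "closer to the camera" and uses plain nested lists in place of real MiDaS output; `depth_to_mask` is a hypothetical helper, not the extension's code.

```python
def depth_to_mask(depth: list[list[float]], threshold: float = 0.5) -> list[list[int]]:
    """Return a binary mask (255 = keep, 0 = ignore) from a 2D depth map.

    Depth values are normalized to [0, 1]; pixels at or above the threshold are kept.
    """
    flat = [value for row in depth for value in row]
    lowest, highest = min(flat), max(flat)
    span = highest - lowest
    mask = []
    for row in depth:
        mask.append([
            255 if span > 0 and (value - lowest) / span >= threshold else 0
            for value in row
        ])
    return mask


# Toy 3x3 depth map standing in for a real depth estimate.
depth = [
    [0.1, 0.2, 0.9],
    [0.1, 0.8, 1.0],
    [0.0, 0.1, 0.2],
]
mask = depth_to_mask(depth, threshold=0.5)
for row in mask:
    print(row)
```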

ABG_extension

https://github.com/KutsuyaYuki/ABG_extension

Automatically remove backgrounds. Uses an onnx model fine-tuned for anime images. Runs on GPU.


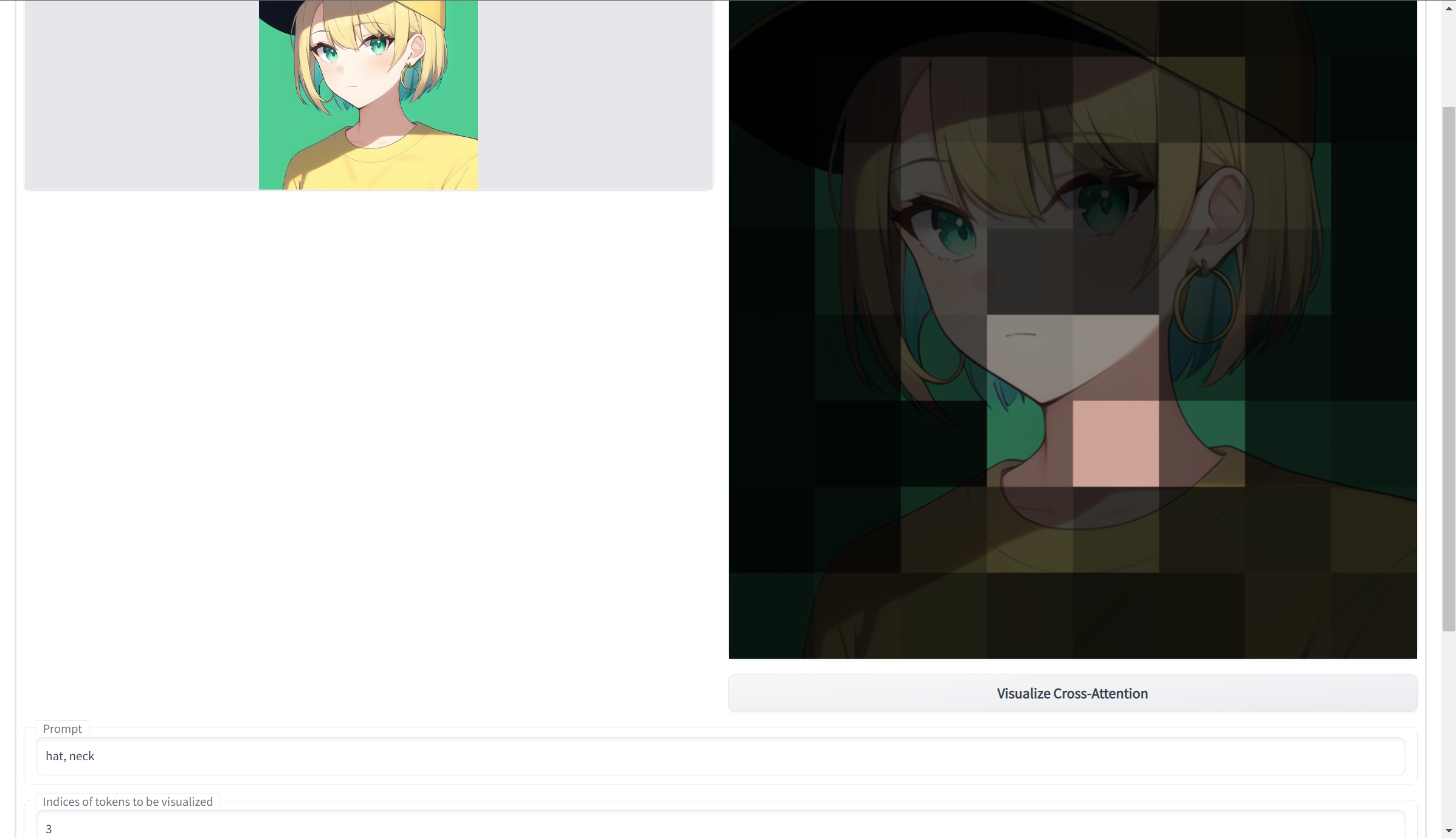

Visualize Cross-Attention

https://github.com/benkyoujouzu/stable-diffusion-webui-visualize-cross-attention-extension

Generates highlighted sectors of a submitted input image, based on input prompts. Use with tokenizer extension. See the readme for more info.

DAAM

https://github.com/kousw/stable-diffusion-webui-daam

DAAM stands for Diffusion Attentive Attribution Maps. Enter the attention text (must be a string contained in the prompt) and run. An overlapping image with a heatmap for each attention will be generated along with the original image.

Prompt Gallery

https://github.com/dr413677671/PromptGallery-stable-diffusion-webui

Build a yaml file filled with prompts of your character, hit generate, and quickly preview them by their word attributes and modifiers.

embedding-inspector

https://github.com/tkalayci71/embedding-inspector

Inspect any token (a word) or Textual Inversion embedding and find out which embeddings are similar. You can mix, modify, or create embeddings in seconds. Much more intriguing options have since been released, see here.

Infinity Grid Generator

https://github.com/mcmonkeyprojects/sd-infinity-grid-generator-script

Build a yaml file with your chosen parameters, and generate infinite-dimensional grids. Built-in ability to add description text to fields. See readme for usage details.

NSFW checker

https://github.com/AUTOMATIC1111/stable-diffusion-webui-nsfw-censor

Replaces NSFW images with black.

Diffusion Defender

https://github.com/WildBanjos/DiffusionDefender

Prompt blacklist, find and replace, for semi-private and public instances.
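A prompt filter of this kind can be sketched in a few lines. The example below is an assumption about how such filtering might look, not the extension's code: `filter_prompt` is a hypothetical helper that applies whole-word, case-insensitive replacements first and then strips blacklisted words.

```python
import re


def filter_prompt(prompt: str, replacements: dict[str, str], blacklist: list[str]) -> str:
    """Apply find-and-replace rules, then remove blacklisted words from the prompt."""
    for old, new in replacements.items():
        pattern = rf"\b{re.escape(old)}\b"
        # A function replacement avoids backslash interpretation in `new`.
        prompt = re.sub(pattern, lambda _m, new=new: new, prompt, flags=re.IGNORECASE)
    for word in blacklist:
        prompt = re.sub(rf"\b{re.escape(word)}\b", "", prompt, flags=re.IGNORECASE)
    # Tidy up: collapse whitespace and drop comma-separated parts that became empty.
    parts = [" ".join(part.split()) for part in prompt.split(",")]
    return ", ".join(part for part in parts if part)


filtered = filter_prompt(
    "a portrait of a Wizard, gore, highly detailed",
    replacements={"wizard": "sorcerer"},
    blacklist=["gore"],
)
print(filtered)
```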

Config-Presets

https://github.com/Zyin055/Config-Presets

Adds a configurable dropdown to allow you to change UI preset settings in the txt2img and img2img tabs.

Preset Utilities

https://github.com/Gerschel/sd_web_ui_preset_utils

Preset tool for UI. Planned support for some other custom scripts.

DH Patch

https://github.com/d8ahazard/sd_auto_fix

Random patches by D8ahazard. Auto-load config YAML files for v2, 2.1 models; patch latent-diffusion to fix attention on 2.1 models (black boxes without no-half), whatever else I come up with.

Riffusion

https://github.com/enlyth/sd-webui-riffusion

Use the Riffusion model to produce music in gradio. To replicate the original interpolation technique, feed the output frames of the prompt travel extension into the riffusion tab.

Save Intermediate Images

https://github.com/AlUlkesh/sd_save_intermediate_images

Implements saving intermediate images, with more advanced features.

openOutpaint extension

https://github.com/zero01101/openOutpaint-webUI-extension

A tab with the full openOutpaint UI. Run with the --api flag.

Enhanced-img2img

https://github.com/OedoSoldier/enhanced-img2img

An extension with support for batched and better inpainting.